While the data often comes from databases, being able to efficiently working with files remains a critical capability. We are committed to providing the best possible experience for working with files, no matter whether they reside on a Windows share, NAS, S3, or elsewhere. By taking advantage of our technology, we have built a system that combines the best features from file manager, exploratory data analysis system, a search engine, an enterprise content management system, and a distributed computation platform. Here are some of the attributes of our file management system:

- Secure

- Indexable

- Searchable

- Deep-linkable

- Metadata support

- Extensible file previews

- Integration with scripting (both for preview and for performing operations on multiple files)

Other planned features:

- Quality control for uploaded files (for instance, checking CSV against a schema)

- Functions triggered on upload (for instance, image recognition)

- Metadata-driven virtual folder hierarchy

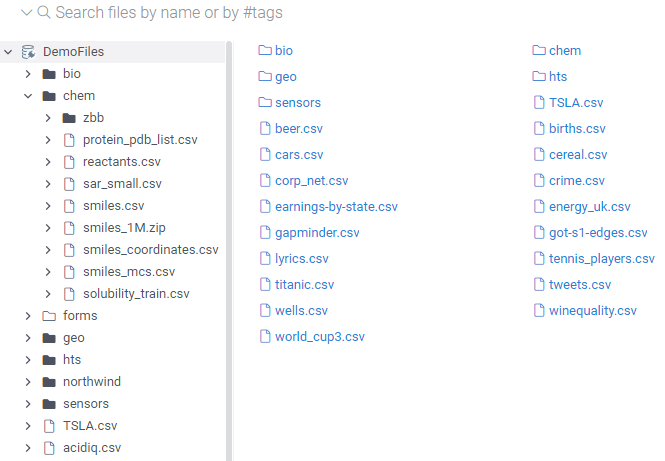

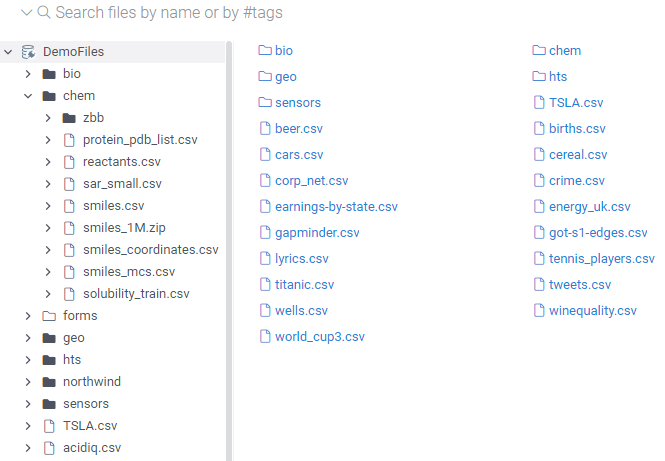

See it yourself by browsing available file share (Admin | Files), or by following direct links like this one: https://public.datagrok.ai/files/Demo.TestJobs.Files.Northwind

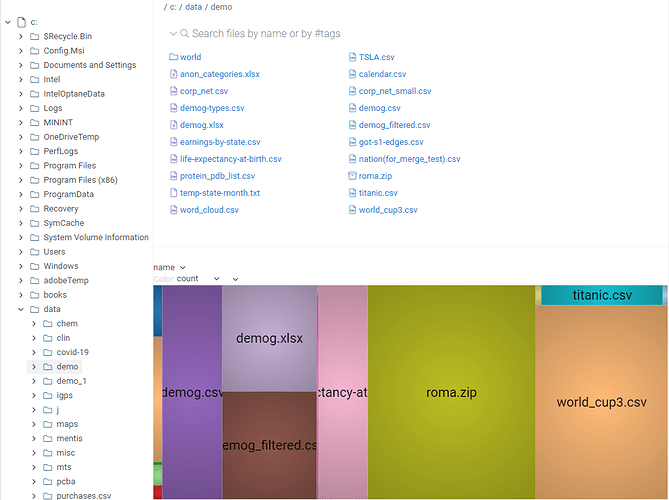

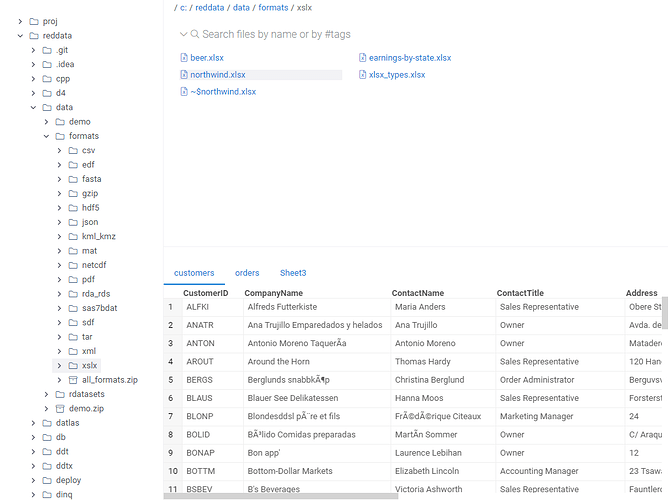

Now with the folder and file preview. File preview supports >30 popular file formats, including pdf, images, as well as >20 data formats natively supported by the platform.

Folder (area-coding by file size):

Excel file (with multiple sheets)

1 Like

Next to come: support for metadata, either entered manually or extracted automatically. We are planning to use Apache Tika for the automatic extraction of metadata.

Hi @Andrew.Skalkin Nice novel work - as usual. It provides an interesting thought for a particular use case where one may want their customers (or colleagues) to highlight the files they are interested in putting through a data pipeline and Datagrok simply imports 1 table per file and will provide an API endpoint for an upstream application to pull that data through Datagrok. The advantage I can see here would be the “integrated quality inspection” tool that Datagrok would be providing to the data owner. It’s where they organize, curate their information that then others can subscribe downstream. Just a thought  Adam

Adam

Hi Adam, welcome to the community, happy to see you here!

I like your ideas. We have previously thought about the possibility to specify and enforce QC rules for uploaded files automatically (such as adherence of the CSV files to the specified schema), and to trigger server-side actions (such as object recognition for pictures, or appending the content of CSV to the already existing relational database). These features are coming.

I think the ability to easily expose a REST API for querying a specified file (with the ability to filter by any attribute and aggregate results) as you suggested would also be great. It could be done already by creating a query based on the CSV file, and executing that query via our REST API - but indeed, a seamless generation of the REST endpoint could be very useful. A similar concept is applicable to the relational databases as well (I was discussing it with people from the other company just yesterday, hope they will join the discussion). If done right, this could also serve as a Data Access Layer for applications, both Datagrok and external ones.

Yeah - totally - I guess I’m seeing Datagrok could provide the portal for data virtualization/access layer that data owners could use in lieu of other platforms to assemble then offer up to others for consumption. Within the Datagrok project - they maybe bringing in CSV and Relational Database and/or unstructured type data - but allowing them to create that signature of data for downstream consumption - like you mentioned - another Datagrok project or another tool all together.

Adam

Adam