I am trying to connect to our internal Azure Blob Storage, but I don’t see the connector. I suspect I’m overlooking something.

Hi Adam, it appears we don’t have an Azure Blob Storage file connector at the moment, but it shouldn’t be hard to implement. Stay tuned!

Hi,

Yep, if it’s machine readable, we can read it. It shouldn’t take a lot of effort.

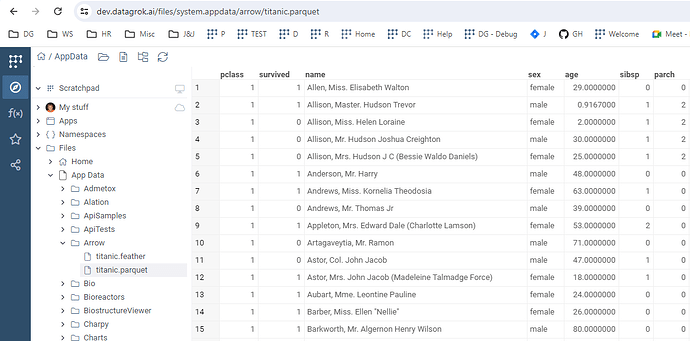

OK, we use the Parquet format (https://parquet.apache.org/) on both S3 and Blob Storage. Can you read these files into Datagrok as well?

Does it work for you? Let us know if there are any problems ingesting the Parquet files.

Oh, that’s awesome! We’ll explore it for sure. When we write Parquet from the arrow package, it produces a set of files (I presume there’s some good logic behind that, but I don’t know it). Can we select multiple files and pull them in as a single aggregate?
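The "set of files" is most likely an Arrow dataset: Arrow’s dataset writer (for example `write_dataset()` in the R or Python arrow packages) typically splits a table into data files named like `part-0.parquet`, and when the data is partitioned it nests them in Hive-style `key=value` folders. A reader can then treat the whole directory as one table. The minimal sketch below uses only the Python standard library to illustrate that layout; the helper `list_parquet_parts` and the example paths are hypothetical, and this is not how Datagrok aggregates files.

```python
from pathlib import Path


def list_parquet_parts(root):
    """Return (relative_path, partition_values) for every .parquet file under root.

    Assumes (hypothetically) an Arrow-style dataset layout: data files named
    like part-0.parquet, optionally nested in Hive-style key=value folders.
    """
    root_path = Path(root)
    parts = []
    # Sort so the result does not depend on filesystem listing order.
    for path in sorted(root_path.rglob("*.parquet")):
        rel = path.relative_to(root_path)
        # Each "key=value" directory segment contributes one partition value;
        # other directory names are ignored.
        partitions = {}
        for segment in rel.parts[:-1]:
            key, sep, value = segment.partition("=")
            if sep:
                partitions[key] = value
        parts.append((rel.as_posix(), partitions))
    return parts
```

Non-Parquet files such as `_SUCCESS` markers are skipped by the `*.parquet` pattern. To actually load such a directory as one table outside Datagrok, pyarrow, for example, offers `pyarrow.dataset.dataset(root, format="parquet", partitioning="hive").to_table()`, which reads every part and exposes the partition keys as columns.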

Hi @adam.fermier ,

Which Azure Blob authentication method are you using right now?

We are using SAS (shared access signature) tokens to connect to Blob Storage.
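For context, a SAS token is a signed query string (fields such as `sv`, `sp`, `se`, and `sig`) that is appended to the resource URL, so no separate login step is needed. The minimal sketch below builds such a URL, assuming the public Azure endpoint `<account>.blob.core.windows.net` (other Azure clouds use different suffixes); the helper `blob_sas_url` and the sample values are hypothetical, and this is not how the Datagrok connector is implemented.

```python
from urllib.parse import quote


def blob_sas_url(account, container, blob_name, sas_token):
    """Build a Blob Storage URL authenticated by a SAS token (hypothetical helper).

    Assumes the public-cloud endpoint https://<account>.blob.core.windows.net.
    """
    # A SAS token is already a URL-encoded query string; accept it with or
    # without a leading "?" and append it unchanged.
    token = sas_token.lstrip("?")
    # Percent-encode the container/blob path but keep "/" so virtual
    # folders in the blob name are preserved.
    path = quote(f"{container}/{blob_name}", safe="/")
    return f"https://{account}.blob.core.windows.net/{path}?{token}"
```

The resulting URL can be fetched with any HTTP client (for example `urllib.request.urlopen`), as long as the token grants read permission (`sp` includes `r`) and has not passed its expiry time (`se`).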

Thanks! Working on it.

Implemented. You can now connect to Azure Blob Storage in Datagrok 1.18 (pending release).